“I feel that the four big AI CEOs in the U.S. are modern-day prophets with four different versions of the Gospel ... telling the same basic story that this is so dangerous and so scary that I have to do it and nobody else.”1

(Max Tegmark)

We are entering a new age of AI, with some American snake-oil salesmen promising AI (and themselves) as a saviour to solve all of our ills (a new era of ‘psychohistory’ – but more Wizard of Oz style). Thank goodness for Demis Hassabis.

However, in this article (for which I used AI for research and content), I contend that we can also use AI as part of a new integrated social science and as a powerful tool for public policy, guided by our demand for a fairer, safer society and sustainable economic policies.

AI has a stronger role to play in managing our socio-economic and ecological issues and creating a fairer society if we manage the risks carefully. The realisation of AI’s benefits is entirely contingent on who controls the technology and for what purpose. Unless we rebuild our political and economic systems, AI will too often be a super-weapon used by the powerful few against the many.2

The twenty-first century is defined by complex, interconnected crises, from climate collapse to rampant inequality.

For any progressive movement dedicated to systemic, structural reform and social justice, traditional policy methods (often based on linear or speculative assumptions) are no longer sufficient. This need is highlighted by the failure of conventional models to anticipate or explain systemic shocks like the 2008 global financial crisis (GFC). As former European Central Bank President Jean-Claude Trichet noted during the crisis, policymakers felt “abandoned by conventional tools” as existing macro models “failed to predict the crisis and seemed incapable of explaining what was happening.” This analytical gap has created a strategic imperative for more adaptive and granular methods.

Artificial Intelligence (AI) is not a utopian solution, but it is an essential tool for achieving genuine societal resilience.

We must strategically deploy AI for public good while embedding robust guardrails—such as transparency, accountability, and citizen participation—to prevent the technology from becoming a tool for centralized control, commercial co-option and environmental harm.

This article outlines the applications of these computational paradigms—data-driven forecasting and agent-based simulation—their core challenges, and the profound governance imperatives that accompany their adoption.

The dual emergence of Artificial Intelligence (AI) and Computational Social Science (CSS) offers a transformative response to the changes and challenges we face. Together, these tools enable a shift from static, equilibrium-based analysis to dynamic, data-rich modelling (which is necessary for the chaotic, non-linear, agent-based world we live in), with the two approaches reinforcing each other:

data-driven forecasting helps predict what might happen

while agent-based simulation helps explore why it might happen and test the impact of “what if” policy interventions

Bank Fraud: Before diving in, let us begin with an easy-to-understand example of the predictive power of AI-driven pattern analysis combined with the explanatory and exploratory capabilities of LLMs and agent-based simulations. Take AI tools for payments fraud management, which can involve three different interacting AI tools:

Machine Learning (ML): Great for fraud pattern analysis and prevention (real-time detection, risk scoring, anomaly identification).

Large Language Models (LLMs): Useful for agent testing (specifically, acting as sophisticated “adversarial agents” or to help create realistic synthetic attack scenarios to challenge ML models).

Agent-Based Modelling (ABM): Useful for systems modelling (simulating the dynamic, complex interactions between various entities—customers, fraudsters, banks—to understand systemic risk and network effects).
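The ML component in the fraud example above can be illustrated with a deliberately simple sketch: scoring new payments by how far they sit from a customer's usual amounts. The function names, the z-score rule, the threshold of 3 and the sample amounts are all assumptions made for illustration; production fraud systems use far richer features and models.

```python
from statistics import mean, stdev

def anomaly_scores(history, new_amounts):
    """Return z-scores of new payment amounts relative to a customer's history."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; assumes amounts vary
    return [(x - mu) / sigma for x in new_amounts]

def flag_suspicious(history, new_amounts, threshold=3.0):
    """Flag payments more than `threshold` standard deviations from the norm."""
    return [abs(z) > threshold for z in anomaly_scores(history, new_amounts)]

# Hypothetical payment history (in pounds), invented for illustration.
history = [20.0, 25.0, 22.0, 30.0, 18.0, 27.0, 24.0, 21.0]
flags = flag_suspicious(history, [23.0, 950.0])
print(flags)
```

In this toy setting the everyday payment passes and the very large one is flagged. An LLM-generated attack scenario or an agent-based simulation would then be used to probe how such a rule behaves when fraudsters adapt.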

AI is not usually a single, homogeneous program (or a magic black box that simulates human intelligence). It is a collection of different technology tools that are more or less integrated to achieve a target outcome.

There are critical technical and resource barriers to implementation of suitable AI tools for public goods – and crucial societal and governance challenges that must be navigated if we are to harness these new technologies responsibly and ensure the benefits to all outweigh the costs and risks for many.3

Optimising Economic Justice and Policy

Designing Optimal Progressive Taxation: We can utilise frameworks like the “AI Economist,”5 which employs Multi-Agent Reinforcement Learning (MARL), to model sophisticated economic systems. Simulations show that AI-designed tax policies consistently achieve a better balance of equality and productivity, leading to higher overall social welfare compared to existing models. This helps ensure that progressive tax reforms better achieve their intended outcomes and are not just popular populist slogans (which may in fact worsen the economy and the unequal distribution of wealth).

Strategic Shock Modelling: For major structural changes, such as nationalising utilities6 (like Thames Water, as the Green Party UK have promised to do7), advanced AI models based on game theory can simulate the actions of diverse stakeholders and market forces (in addition to the expected captured media resistance). This strategic foresight helps policy designers select optimal legislative and communication pathways to maximise the chance of a successful, non-disruptive transition.

Policy Automation: AI-Enhanced Policy (AEP) tools can automate and accelerate necessary regulatory processes, such as Automated Regulatory Impact Assessment (RIA), crucial for governing during periods of planned structural change.
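The equality-versus-productivity trade-off behind the tax design point above can be sketched in a few lines. This is not the AI Economist or MARL: it is a brute-force search over a flat tax rate in a toy economy. The welfare objective (total income multiplied by one minus the Gini coefficient), the rule that effort falls as the tax rate rises, the equal rebate and the four incomes are all assumptions chosen for illustration.

```python
def gini(incomes):
    """Gini coefficient: 0 means perfect equality, higher means more unequal."""
    xs = sorted(incomes)
    n = len(xs)
    # Standard rank-weighted formula on sorted values.
    weighted = sum((i + 1) * x for i, x in enumerate(xs))
    return (2 * weighted) / (n * sum(xs)) - (n + 1) / n

def post_tax_incomes(pre_tax, rate, effort_response=0.5):
    """Flat tax with equal rebate; higher rates reduce effort (hypothetical rule)."""
    effort = 1.0 - effort_response * rate
    earned = [x * effort for x in pre_tax]
    rebate = rate * sum(earned) / len(earned)
    return [x * (1.0 - rate) + rebate for x in earned]

def welfare(incomes):
    """Toy objective: productivity (total income) times equality (1 - Gini)."""
    return sum(incomes) * (1.0 - gini(incomes))

pre_tax = [10.0, 20.0, 30.0, 140.0]          # invented incomes
rates = [i / 10 for i in range(11)]          # candidate tax rates 0.0 to 1.0
best_rate = max(rates, key=lambda r: welfare(post_tax_incomes(pre_tax, r)))
print(best_rate)
```

Under these assumptions the best rate lies in the interior of the range: very low rates leave inequality untouched, while very high rates shrink the total. Learning-based approaches replace the fixed effort rule with agents that adapt their behaviour to the policy.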

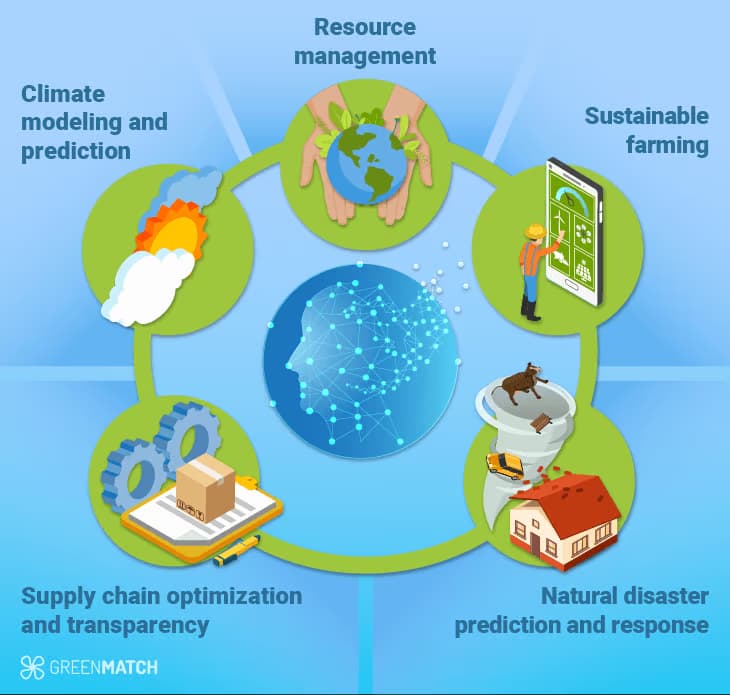

The green transition demands technological sophistication, which AI can deliver. AI offers transformative solutions essential for decarbonisation and ecological resilience.

Smart Grid Optimisation: AI is leveraged for optimising the flexible energy grid and enhancing demand-side management to accelerate renewable integration. Implementing advanced neural networks for forecasting wind and solar power output can increase the value of renewables and reduce the necessity for high-carbon backup sources.8

Planetary Monitoring: AI-powered systems can revolutionise biodiversity monitoring9 by processing vast datasets from Earth Observation (EO) and Autonomous Underwater Vehicles (AUVs). This enables rapid identification of invasive species, early detection of harmful algal blooms (HABs), and near-real-time tracking of changes in marine ecosystems.10

Green Science Discovery: AI can dramatically accelerate the discovery of new materials, such as Metal-Organic Frameworks (MOFs), which are critical for green technology applications like direct-air carbon capture.11

Public Safety and Health: AI can be used to model and analyse extreme weather events (like floods and droughts)12 to improve disaster preparedness and optimise resource allocation. In urban areas, AI tools like DeepMind’s “Green Light” can optimise traffic lights to reduce stop-and-go traffic, lowering vehicle emissions and improving urban air quality.13

The strategic importance of data-driven forecasting is growing rapidly. Fueled by the proliferation of big data from digital sources and the maturation of advanced machine learning algorithms, this approach represents a significant leap beyond traditional forecasting methods. It enables more granular, timely, and adaptive predictions for economic, environmental, and social systems, offering policymakers more actionable and responsive insights.

Current Applications and Methodologies

AI-powered forecasting is being applied across a diverse range of domains, leveraging machine learning to identify complex patterns and relationships that are often invisible to conventional analysis.
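Forecasting models of this kind are usually judged against a naive baseline. The sketch below shows one common way to do that, assuming a normalised root mean square error (NRMSE) divided by the range of observed values (other normalisations exist) and a "persistence" baseline that predicts each value as the previous one. The wind output figures are invented for illustration.

```python
from math import sqrt

def nrmse(actual, predicted):
    """Root mean square error normalised by the range of the actual values."""
    mse = sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)
    return sqrt(mse) / (max(actual) - min(actual))

def persistence_forecast(series):
    """Naive baseline: the forecast for each step is the previous observation."""
    return series[:-1]

# Hypothetical hourly wind output in MW, invented for illustration.
output = [10.0, 12.0, 15.0, 14.0, 18.0, 20.0]
actual = output[1:]
baseline_error = nrmse(actual, persistence_forecast(output))
print(round(baseline_error, 3))
```

A machine learning forecaster adds value only if its NRMSE on held-out data is clearly below this baseline figure.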

Agent-Based Modelling (ABM) offers a fundamentally different “bottom-up” paradigm for analysis.15

Instead of modelling aggregate variables, ABM simulates macroeconomic and social dynamics from the ground up, based on the interactions of numerous heterogeneous “agents” (e.g., households, firms, individuals).16

Its strategic value lies in its ability to explore out-of-equilibrium dynamics, path dependencies, and emergent phenomena—complex system-wide behaviours that arise from simple micro-level interactions and are often beyond the scope of standard equilibrium models.

Specific applications include stress-testing economic policies against a range of scenarios, assessing the equity implications of urban planning decisions, and modelling the effectiveness of public health interventions. This moves policymaking from a reactive posture to a proactive, experimental one. These simulation techniques provide a virtual laboratory for policy experimentation. However, their reliability is contingent on overcoming significant data, validation, and resource hurdles—challenges they share with the data-driven forecasting models.

Core Principles and Methodologies

The ABM approach is defined by its focus on individual actors and their interactions within a simulated environment.

Defining the Agent: An “agent” is a computational entity characterised by four key abilities: autonomy (acting without direct control), social ability (interacting with other agents), reactivity (perceiving and responding to its environment), and pro-activeness (taking initiative to pursue goals).

Contrast with System Dynamics (SD): While ABM focuses on micro-level interactions to understand emergent macro behaviour, SD modelling takes a top-down, aggregate view of a system, focusing on the feedback loops and flows between high-level components.

Standardisation and Transparency: To improve consistency and reproducibility, the ODD (Overview, Design concepts, and Details)17 protocol has been developed. It provides a standard framework for describing agent-based models, making them more transparent and easier for others to understand, critique, and replicate.
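The four abilities that define an agent can be made concrete with a toy example. The `Household` class below, its saving rule and all of its numbers are hypothetical, chosen only to show where autonomy, social ability, reactivity and pro-activeness appear in code.

```python
class Household:
    """Toy agent illustrating the four abilities described above."""

    def __init__(self, name, savings_goal):
        self.name = name
        self.savings = 0.0
        self.savings_goal = savings_goal   # pro-activeness: a goal it pursues
        self.neighbours = []               # social ability: agents it observes
        self.last_price = 1.0

    def perceive(self, price):
        # Reactivity: the agent observes its environment (here, one price).
        self.last_price = price

    def step(self, income):
        # Autonomy: the agent applies its own rule with no central controller.
        if self.neighbours:
            peer_avg = sum(n.savings for n in self.neighbours) / len(self.neighbours)
        else:
            peer_avg = 0.0
        save_rate = 0.10
        if self.savings < self.savings_goal:
            save_rate += 0.10              # saves more while below its goal
        if self.savings < peer_avg:
            save_rate += 0.10              # saves more when behind its peers
        if self.last_price > 1.0:
            save_rate -= 0.05              # saves less when prices are high
        self.savings += save_rate * income

a = Household("a", savings_goal=100.0)
b = Household("b", savings_goal=0.0)
a.neighbours, b.neighbours = [b], [a]
for agent in (a, b):
    agent.perceive(price=1.2)
    agent.step(income=100.0)
print(a.savings, b.savings)
```

Even in this two-agent case the households end up at the same savings level for different reasons (one is chasing a goal, the other is keeping up with its neighbour), which is the kind of micro-level heterogeneity an aggregate System Dynamics model would not represent.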

Applications in Policy Analysis and Social Science

By simulating the behaviour of individual actors, ABM (and related computational models) provide a virtual laboratory for exploring complex policy questions across a wide range of fields.

Macroeconomic Policy Analysis: Large-scale models like Eurace@Unibi18 are used to analyze the effects of economic policies by capturing the heterogeneity of firms and households. For example, these models can study the impact of labour market frictions on economic cohesion between different regions, revealing dynamics that are obscured in representative-agent models. They have also been used to model the effects of Basel II/III banking regulation on the financial fragility of the macroeconomic system.19

Public Policy Experimentation: Simulation allows policymakers to explore policy trade-offs and potential unintended consequences in a controlled, virtual environment. This approach has been used to evaluate complex scenarios such as the effectiveness of different COVID-19 mitigation strategies20 and the potential impact on crime rates of shifting municipal funding between policing and social programs. However, studies on the impact of the use of AI during COVID-19 are also crucial to situate this in a wider social context.21

Technology and Innovation Diffusion: ABMs are widely used to model how new technologies, behaviours, or ideas spread through a population.22 These models can simulate the adoption of rooftop solar panels or energy-saving practices, taking into account factors like social influence, network structure, and consumer preferences to inform policy interventions.

Long-Term Historical Analysis: Projects like Seshat: Global History Databank23 demonstrate the power of computational methods for long-term social science. By converting vast amounts of historical and archaeological information into structured, machine-readable data, researchers can rigorously test competing theories about the evolution of complex societies and the drivers of inequality over millennia.
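The diffusion application above lends itself to a very small simulation. The sketch assumes a ring of households, each of which adopts a technology (say, rooftop solar) once the share of its two immediate neighbours who have adopted reaches a threshold. The ring network, the threshold rule and the parameter values are illustrative assumptions, not taken from any of the cited models.

```python
def simulate_adoption(n_agents, seeds, threshold, steps):
    """Threshold diffusion on a ring; returns the adopter count after each step."""
    adopted = [i in seeds for i in range(n_agents)]
    history = [sum(adopted)]
    for _ in range(steps):
        nxt = adopted[:]
        for i in range(n_agents):
            if adopted[i]:
                continue
            # Each agent looks only at its left and right neighbours.
            left, right = adopted[i - 1], adopted[(i + 1) % n_agents]
            if (left + right) / 2 >= threshold:
                nxt[i] = True
        adopted = nxt                      # synchronous update
        history.append(sum(adopted))
    return history

with_subsidy = simulate_adoption(10, {0}, threshold=0.5, steps=3)
without_subsidy = simulate_adoption(10, {0}, threshold=1.0, steps=3)
print(with_subsidy, without_subsidy)
```

Reading the lower threshold as the effect of a subsidy, adoption spreads steadily from a single early adopter; with the higher threshold it never leaves the first household. That is the kind of "what if" comparison a policymaker can run before committing real funds.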

Despite their transformative potential, policymakers must recognise that AI and computational models are not turnkey solutions. Their development and deployment are resource-intensive and confront significant operational and technical challenges related to data quality, model validation, computational cost, and human capital. Policymakers also need to identify AI and technology champions who share their core social and political concerns, to help drive careful technological adoption in their social and political movements.

Data-Related Challenges

Data Quality and Bias: The performance of any AI model is contingent on the quality of its training data. Datasets require rigorous cleaning and pre-processing to handle missing values, outliers, and noise. More critically, inherent biases in data—such as representation bias (under-representing certain groups, e.g., a medical dataset that under-represents women, leading to less accurate diagnoses), measurement bias (inaccurate data collection), and confirmation bias (reinforcing existing beliefs)—can lead to unfair, inaccurate, and discriminatory outcomes, particularly for marginalised communities.

Data Accessibility and Infrastructure: The effective use of these technologies depends on reliable infrastructure, including electricity, internet connectivity, and data storage. The “digital divide” remains a key barrier, as limited access to digital devices and connectivity in developing or underserved regions prevents equitable access to AI-driven resources and widens existing disparities.
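Representation bias of the kind described above often shows up as a gap in accuracy between groups. A minimal audit, assuming labelled predictions are available with a group attribute attached, might look like the sketch below. The record format and the sample data are invented for illustration.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        hits[group] += int(truth == predicted)
    return {g: hits[g] / totals[g] for g in totals}

# Hypothetical audit sample: the under-represented group has fewer records.
sample = [("men", 1, 1), ("men", 0, 0), ("men", 1, 1), ("men", 0, 0),
          ("women", 1, 0), ("women", 0, 0)]
rates = accuracy_by_group(sample)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap does not by itself prove discrimination, but it is a cheap signal that the training data or the model deserves closer scrutiny before deployment.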

Interpretability and the “Black Box” Problem: The complexity of many advanced AI models, particularly in deep learning, creates the “black box” effect where the reasoning behind a prediction or decision is not easily understood. This lack of transparency can erode trust, hinder accountability, and limit the model’s utility in high-stakes domains like medicine and criminal justice, where explaining the rationale for a decision is critical.

Validation and Calibration: Validating complex simulation models is a persistent challenge, especially when comprehensive real-world data for direct comparison is unavailable. For ABMs, an “indirect calibration” approach is often used, where the model is validated by its ability to reproduce known real-world patterns or “stylised facts” (e.g., business cycle fluctuations). This often requires a layered validation approach that incorporates feedback from domain experts to ensure the model’s assumptions are plausible.

Human Behaviour Modelling: Representing human decision-making is a primary challenge in social simulation. Human behaviour is not easily reduced to simple rules; it is a complex interplay of social interactions, learning, memory, and emotions. Capturing this richness and heterogeneity accurately within a computational model remains a significant hurdle. Gamification and game theory are key conceptual and operational tools within such AI models.
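The indirect calibration idea described above can be sketched as a search for the parameter value whose simulated output best reproduces a known pattern. Here the "model" is a stand-in (a simple autoregressive series), the stylised fact is a target persistence in output growth, and the target value of 0.75 and the candidate grid are hypothetical.

```python
import random

def simulate_output(persistence, n=2000, seed=0):
    """Stand-in model: an AR(1) series playing the role of simulated growth."""
    rng = random.Random(seed)              # fixed seed keeps runs reproducible
    x, series = 0.0, []
    for _ in range(n):
        x = persistence * x + rng.gauss(0.0, 1.0)
        series.append(x)
    return series

def lag1_autocorrelation(series):
    """Summary statistic compared against the stylised fact."""
    m = sum(series) / len(series)
    num = sum((a - m) * (b - m) for a, b in zip(series, series[1:]))
    den = sum((a - m) ** 2 for a in series)
    return num / den

def calibrate(target, candidates):
    """Pick the parameter whose simulated statistic is closest to the target."""
    return min(
        candidates,
        key=lambda p: abs(lag1_autocorrelation(simulate_output(p)) - target),
    )

best = calibrate(target=0.75, candidates=[0.2, 0.5, 0.8])
print(best)
```

Real ABM calibration matches several stylised facts at once and checks the result with domain experts, but the logic is the same: the model earns credibility by reproducing patterns it was not directly fitted to.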

Resource and Capacity Hurdles

Computational Cost: The financial and environmental costs of AI can be substantial. Training large-scale models consumes massive amounts of electricity and generates a significant carbon footprint. For example, training GPT-3 was estimated to release 552 metric tons of carbon dioxide, equivalent to the annual emissions of 123 petrol cars.24

Skills Gap: A significant shortage of skilled personnel exists. Training in computational methods is not yet a standard part of most social science curricula in the UK, creating a gap between the demand for data scientists and modellers and the available supply. However, training is available through dedicated university centres, like the Nuffield Foundation funded Q-Step Centres25, and specialised Master’s programs offered by institutions such as The University of Manchester. More drive and engagement is needed in this area and it should be an area the UK is a world leader in (if it could get its act together).26
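As a quick check on the GPT-3 comparison in the computational cost point above, the two quoted figures imply the following per-car emissions (simple division of the numbers given, nothing more):

```latex
\frac{552\ \text{t CO}_2}{123\ \text{cars}} \approx 4.5\ \text{t CO}_2 \text{ per car per year}
```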

Strategic Implications for Policymakers

Addressing these barriers requires a deliberate, multi-pronged policy strategy:

First, to combat data bias, policymakers should mandate the development of data quality standards and fairness audits for AI systems used in the public sector.

Second, bridging the digital divide necessitates targeted investment in broadband infrastructure for underserved regions.

Third, the skills gap requires strategic investment in human capital. Policymakers should consider funding interdisciplinary university programs that merge social and computer sciences, expanding public-sector data science fellowships, and partnering with AI institutes and UK-based development and commercial projects (like DeepMind) to up-skill colleagues and the civil service.

Finally, mitigating computational costs involves promoting research into more energy-efficient AI architectures.

These technical and operational challenges do not exist in a vacuum; they create and compound broader societal risks and governance imperatives that must be addressed proactively.

Amplification of Socio-Economic Inequality

There is documented evidence that the current trajectory of AI investment is linked to rising income disparities.27 This occurs through several mechanisms:

AI disproportionately benefits highly skilled workers while displacing lower-skilled jobs involving repetitive tasks. This accelerates the need for universal basic income (UBI) to be implemented28 as well as the use of AI to monitor and take action on poverty, deprivation and inequality.29

It concentrates wealth among technology owners and a small number of dominant “superstar firms” that can afford the immense capital and talent required for cutting-edge AI development.

This dynamic has given rise to concerns about what economist Jean-Paul Carvalho has termed “techno-feudalism”: a future where the dispensability of human labour could sharply increase the power of financial capital, creating unprecedented divisions between a technological elite and the general population. Countering this also requires major publicly funded re-skilling initiatives for renewable energy sectors, data science, AI and other skills essential for a high-value, service-based economy (as well as a rejuvenated manufacturing base in the UK).

Erosion of Democratic Norms and Concentration of Power

AI also poses a significant risk to democratic governance by creating powerful tools for surveillance and control.

Elite Domination: Empirical research shows that in many Western democracies, policy decisions already strongly align with the preferences of the wealthy rather than average citizens. AI could exacerbate this by giving powerful interests new tools for influence.

Surveillance & Control: AI-powered surveillance systems facilitate the detection of “subversive behaviour” and can discourage dissent. The extensive use of AI for social control and management of people, as seen in the Occupied Palestinian Territories and China, demonstrates how this technology can be used to entrench authoritarian tendencies and erode civil liberties.30 The USA appears to be desperate to also become a leader in this space and the UK is likely following it (as usual!).

Establishing Robust Governance and Regulatory Frameworks

To navigate these risks, governance frameworks must be built on a foundation of core ethical principles:

Accountability and Governance: Clear lines of responsibility must be established for the outcomes of AI systems across their entire life cycle, from development to deployment.

Transparency and Explainability: The “black box” problem must be mitigated to ensure AI-driven decisions can be understood, scrutinised, and challenged by those they affect.

Fairness: It is imperative to actively identify and mitigate biases within AI systems to prevent the perpetuation of historical and systemic discrimination.

Safety, Security & Robustness: AI models must undergo rigorous validation and testing to ensure they are reliable, secure from manipulation, and perform as intended.

Human Oversight: AI should be designed to augment, not replace, human judgment. For critical decisions, a human must remain “in the loop” (directly involved) or “on the loop” (able to intervene and override the system).
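The distinction between a human "in the loop" and "on the loop" can be expressed as a simple routing rule. The sketch below is one possible policy, not a prescribed standard: the `Decision` fields, the 0.7 review threshold and the three route names are assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    case_id: str
    risk_score: float      # model output, assumed to lie in [0, 1]
    high_stakes: bool      # e.g. justice or healthcare contexts

def route(decision, review_threshold=0.7):
    """Return who acts on a case under a simple oversight policy."""
    if decision.high_stakes:
        return "human_in_the_loop"      # a person makes the final decision
    if decision.risk_score >= review_threshold:
        return "human_on_the_loop"      # automated, but flagged for override
    return "automated"

routes = [
    route(Decision("benefit-appeal", 0.20, high_stakes=True)),
    route(Decision("parking-permit", 0.85, high_stakes=False)),
    route(Decision("library-renewal", 0.10, high_stakes=False)),
]
print(routes)
```

Writing the rule down explicitly, rather than leaving it implicit in a vendor's system, is what allows it to be audited and challenged.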

Globally, different regulatory strategies are emerging. The UK has adopted an incremental, sector-led approach, empowering existing regulators to develop context-specific rules. In contrast, the European Union has pursued the comprehensive, risk-based AI Act, which classifies AI systems into different risk tiers with corresponding legal obligations.

Ultimately, the successful and beneficial integration of AI into society turns on public trust. Survey data reveal that a lack of trust is a major barrier to adoption, including a lack of trust in AI-generated content and concerns about privacy and data security.31 Critically, public engagement with, and interest in, deploying AI for useful purposes correlates strongly and positively with trust in a government’s ability to regulate it effectively. This underscores that robust, transparent, and accountable governance is not a barrier to innovation but a prerequisite for it.

Strategic Implications for Policymakers

Effective governance is the primary tool for mitigating societal risks and building public trust. Policymakers must move beyond high-level principles to establish concrete regulatory mechanisms. This includes creating clear liability frameworks that assign responsibility for AI-induced harms, mandating public registers and impact assessments for high-risk AI systems used in the public sector, and establishing independent auditing bodies to verify claims of fairness and safety.

Adopting a flexible, risk-based approach, similar to the EU’s AI Act, allows for stringent oversight where risks are high (e.g., justice, healthcare) without stifling innovation in low-risk domains. However, this requires much more honesty than we have had to date about the risks of giving government contracts for our sensitive data to entities linked to human rights abuses, such as Palantir.32

Harnessing AI’s potential responsibly requires a multi-faceted strategy that combines technical solutions with strong legal and ethical guardrails including corporate governance verification of key suppliers.

The pursuit of AI benefits must directly confront its enormous resource consumption, which includes substantial electricity use, water strain, and extractives required for proliferating data centers. This environmental liability is known as AI’s Footprint.

The Risk: Training and deploying LLMs requires substantial computing power, significantly contributing to carbon emissions. The environmental burden includes direct impacts (energy, water) as well as indirect risks (stimulating excess demand for finite resources). Environmental ecosystems must be recognised as a top-level risk category.

Our Progressive Guardrails: We must ensure AI is deployed as a net-positive force for decarbonisation by prioritising high-impact applications (AI’s Handprint) over high-cost, low-impact uses.

AI Governance: Our governance framework will require mandatory efficiency labelling for hardware and AI systems to guide procurement toward less resource-intensive choices.

Algorithmic Impact Assessments (AIA): We must enforce AIAs, analogous to Environmental Impact Assessments, to evaluate the potential environmental threats of new AI systems prior to scaling and ensure that systems align with sustainability and equity goals.

The development of advanced AI risks accelerating the concentration of economic and political power within a few tech giants.

This centralised control, often held by powerful foreign entities, threatens to make AI a tool of unjust corporate dominance and compromises national sovereignty.

The Risk: Reliance on closed, proprietary models from dominant players consolidates control over the underlying infrastructure and data.

Foreign Control & Influence: Furthermore, the outsourcing of critical functions like education, defence, or social safety nets to foreign AI companies raises serious questions about national sovereignty. This concentration also leads to heightened security risks and the potential for unfair influence on regulatory frameworks.33

Progressive Guardrails: We must champion the democratisation of AI development by mandating the use of open-source models and verifiable data across public services to mitigate the risks associated with opaque, commercially developed “black box” models. This approach counteracts monopolisation and fosters innovation by reducing reliance on a few dominant players.

We should establish Public Data Sovereignty Trusts and Verifiable Data Audit (VDA) infrastructure to secure data ownership and public confidence.

We must invest in building a robust domestic public AI ecosystem to ensure that critical functions benefit the UK and our European partners and are free from direct and indirect foreign interference. The EU has shown us the way with its multi-lingual LLM foundation model – Tilde.34
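One building block that a verifiable audit trail of the kind proposed above could use is a hash-chained, append-only log, in which each entry commits to the one before it so that later tampering becomes detectable. The sketch below is an illustration of that general technique only; it is not a description of any existing VDA system, and the record fields are invented.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry

def entry_hash(prev_hash, record):
    """SHA-256 over the previous hash plus a canonical JSON form of the record."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

def append_entry(log, record):
    """Append a record whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash,
                "hash": entry_hash(prev_hash, record)})

def verify(log):
    """Recompute every hash in order; any edited record breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_entry(log, {"dataset": "housing-waitlist", "accessed_by": "model-a"})
append_entry(log, {"dataset": "housing-waitlist", "accessed_by": "auditor"})
intact = verify(log)
log[0]["record"]["accessed_by"] = "someone-else"   # simulate tampering
tampered_ok = verify(log)
print(intact, tampered_ok)
```

A public body would still need independent parties to hold copies of the latest hash, otherwise the log's owner could simply rewrite the whole chain.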

Public trust in AI systems, particularly in governance, is entirely contingent upon establishing institutionalised transparency. The opacity of many complex AI systems poses a primary concern.

The Risk: As noted above, many advanced Machine Learning models operate as “black boxes,” meaning their decision-making processes are not always transparent or easily understood. This opacity makes human auditing difficult, erodes public trust, and complicates the challenging of decisions. Without transparency, it is nearly impossible to evaluate the rationale behind a decision.

Progressive Guardrails: We must treat Explainable AI (XAI) as both a technical solution and a societal imperative. XAI ensures systems are interpretable, transparent, and aligned with ethical standards, providing clear and understandable rationales for recommendations. We will develop frameworks to ensure individuals can obtain a factual, clear explanation of decisions, especially in cases of unwanted outcomes.

Accountability: Policies must mandate human accountability by affirming that AI models are predictive tools, not decision-makers. Human experts must provide context, interpret results, and address issues that AI systems might overlook.

Participation: We must prioritise citizen participation and inputs into policy-making to build trust and wider awareness of AI deployment.

Artificial intelligence and computational modelling offer a paradigm shift for economic and social analysis. As complementary tools, data-driven forecasting can predict what may occur with greater accuracy, while agent-based simulation provides a laboratory to explore why it occurs and test policy interventions. Together, they provide capabilities that far exceed the reach of traditional methods, giving policymakers powerful new instruments to design more responsive and effective governance for an increasingly complex world.

Agent-based simulation provides a “policy laboratory” to de-risk and optimise decision-making. Policymakers should use ABMs to conduct in silico experiments before deploying real-world policies. This allows for testing the potential second- and third-order effects of legislation, identifying unintended consequences, and evaluating the differential impacts on heterogeneous populations.

However, this transformative potential is matched by significant risks. These are not merely technical challenges of data quality or model validation; they are profound societal risks. AI’s current trajectory threatens to amplify socio-economic inequality, concentrate power, worsen environmental harms and erode democratic oversight.

The challenges of bias, transparency, and accountability are not peripheral concerns but central to the responsible deployment of these AI systems.

Implementing AI solutions for public good requires a number of different approaches and steps. The strategic path forward requires a two-pronged strategy of Proactive Capability Building and Adaptive Governance.

Key actions needed include:

Investing in modern data infrastructure to support real-time data ingestion and processing.

Establishing dedicated data science units within government agencies to develop and maintain forecasting models.

Integrating these more accurate and timely predictions into budgetary planning, infrastructure development, and crisis response protocols.

Policymakers must first actively build capabilities by investing in data infrastructure, fostering interdisciplinary research, and closing the critical skills gap. Simultaneously, they must construct robust, adaptive, and human-centric governance frameworks that establish clear principles of accountability, fairness, and transparency. This ensures that human judgment remains central to critical decisions and builds public trust through effective regulation:

Phase 1: Foundation (Ethics & Data): Mandate Algorithmic Impact Assessments and establish Public Data Sovereignty Trusts.

Phase 2: Governance (Transparency & Accountability): Implement mandatory efficiency labelling for AI systems and establish independent auditing bodies.

Phase 3: Scaling (Sovereignty & Public Investment): Invest in a robust domestic open-source AI ecosystem and mandate the use of Explainable AI (XAI) in all high-stakes public-facing systems.

The ultimate goal is not technological advancement for its own sake, but the careful and responsible integration of these tools to foster more effective, equitable, and just societies.

The choice is not whether to use AI, but whether we will allow it to concentrate power, or harness it as the most powerful tool ever created for a fairer, safer, and more sustainable world.

By integrating AI development with core progressive values—Ecological Wisdom, Social Justice, and Grassroots Democracy—and establishing comprehensive governance frameworks that prioritise transparency, sustainability, and data sovereignty, we can channel AI’s powerful potential toward creating a demonstrably better society.

AI is a communal technology and, in the case of LLMs, it is literally built on the existing corpus of all human knowledge. AI should also be a public good; let’s make sure it is.

https://www.independent.co.uk/news/silicon-valley-mark-zuckerberg-peter-thiel-god-nobel-prize-b2816511.html ︎

https://jasonhickel.substack/why-capitalism-is-fundamentally-undemocratic ︎

Bank of England, “Infusing economically motivated structure into machine learning methods” (2025), on how economic theory can be integrated into machine learning models to enhance their interpretability and applicability for policy analysis: https://www.bankofengland.co.uk/-/media/boe/files/working-paper/2025/infusing-economically-motivated-structure-into-machine-learning-methods.pdf

https://greenparty.org.uk/2024/06/26/plan-for-our-rivers-greens-say-privatise-water-companies-invest-in-sewage-infrastructure-and-give-regulator-real-teeth/ ︎

https://www.sciencedirect.com/science/article/pii/S0306261925007469 ︎

https://pml.ac.uk/news/revolutionizing-biodiversity-monitoring-the-power-of-ai-and-new-technologies/ ︎

https://www.turing.ac.uk/research/research-projects/ai-and-autonomous-systems-assessing-biodiversity-and-ecosystem-health ︎

https://www.chemistryworld.com/news/ai-enables-quicker-search-for-mofs-to-soak-up-carbon-dioxide/4022023.article ︎

https://wmo.int/media/magazine-article/future-of-flood-forecasting-technology-driven-resilience#:~:text=The%20Ministry%20is%20now%20advancing,to%20generate%20dynamic%20flood%20simulations. ︎

https://publicpolicy.google/resources/europe_ai_opportunity_climate_action_en.pdf ︎

It achieved a normalized root mean square error (NRMSE) of just 7.8%, a significant improvement over the 16.5% error rate of the persistence model baseline.

https://hai.stanford.edu/policy/simulating-human-behavior-with-ai-agents ︎

See e.g.:

https://www.researchgate.net/publication/228969790_The_ODD_protocol_A_review_and_first_update ︎

https://www.uni-bielefeld.de/fakultaeten/wirtschaftswissenschaften/lehrbereiche/etace/eurace@unibi/ ︎

https://www.researchgate.net/publication/314403909_Agent-Based_Modeling_of_Energy_Technology_Adoption_Empirical_Integration_of_Social_Behavioral_Economic_and_Environmental_Factors ︎

https://en.wikipedia.org/wiki/Environmental_impact_of_artificial_intelligence ︎

https://www.nuffieldfoundation.org/students-teachers/q-step ︎

https://businesscloud.co.uk/news/the-alan-turing-institute-behind-the-unrest-at-the-ai-powerhouse/#:~:text=Staff%20submitted%20a%20whistleblowing%20complaint,defined%20by%20fear%20and%20defensiveness. ︎

https://www.cgdev.org/blog/three-reasons-why-ai-may-widen-global-inequality ︎

https://blogs.lse.ac.uk/businessreview/2025/04/29/universal-basic-income-as-a-new-social-contract-for-the-age-of-ai-1/ ︎

https://healthandscienceafrica.com/2025/08/artificial-intelligence-universal-weapon-against-poverty-in-africa-tech-expert/ ︎

https://www.iod.com/news/science-innovation-and-tech/major-blockers-to-ai-adoption-in-british-business/ ︎

https://goodlawproject.org/palantir-poised-to-cash-in-on-wes-streetings-nhs-plan/ ︎

https://www.intereconomics.eu/contents/year/2025/number/2/article/big-tech-and-the-us-digital-military-industrial-complex.html ︎

https://digital-strategy.ec.europa.eu/en/library/eu-funded-tildeopen-llm-delivers-european-ai-breakthrough-multilingual-innovation ︎


Peter Howitt
